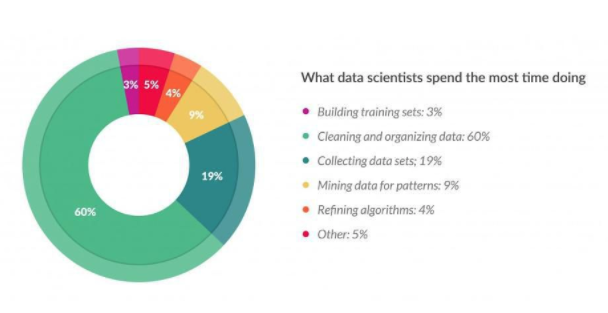

What keeps analysts and data scientists from being productive? According to studies, it’s wrangling data. Vast volumes of data must be sourced, collected, organized, and cleansed before they are useful for solving problems.

An article by Forbes cites an estimate that about 80 percent of the time spent working with data goes to collecting it and preparing it for analysis. This includes identifying data sources, extracting the information, preparing the data for loading, and validating or cleansing it. Another article, from Dataconomy, reports that most of an analyst’s daily time is devoted to data preparation. In some cases, the daunting time investment needed to get data into a usable state has been a complete roadblock to using the information available.

What can be done to improve the efficiency of analysts and data scientists suffering through the tedium of the daily care and feeding of large datasets? Two answers bubble to the top: sharing and tools. Tools can dramatically cut the time and cost of data preparation. Sharing, by breaking down data silos, lets tested data preparation techniques be reused, reducing time and rework.

Reusing and sharing rules and processes provides a great deal of value. Whether data is massaged algorithmically or manipulated manually for quality and standardization, taking these efforts out of silos increases their value to the organization. Every reuse yields further insight for less effort.

When sharing informally, share processes rather than datasets: data goes stale, and with a shared dataset it can be impossible to tell how the data was manipulated or whether errors were made. When sharing processes, be sure to comment on and understand any business rules being applied. Different use cases have different needs, and you don’t want to inadvertently propagate a rule that could invalidate or skew your results.
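To make the advice concrete, here is a minimal sketch of what a shareable process might look like in Python. Everything in it is hypothetical: the `cleanse_orders` function, the `CleanseConfig` class, the record fields `amount` and `country`, and the two business rules are illustrative assumptions, not taken from any real system. The point is that each rule is commented and switchable, so a colleague with a different use case can see what is applied and turn it off, and can rerun the process on fresh data instead of inheriting a stale copy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CleanseConfig:
    # Hypothetical business rule: negative amounts are refunds and are dropped.
    # A different use case (e.g. returns analysis) may need to keep them.
    drop_negative_amounts: bool = True
    # Hypothetical business rule: country codes are standardized to upper case.
    uppercase_country: bool = True


def cleanse_orders(rows, config=CleanseConfig()):
    """Return cleansed copies of order records (dicts with 'amount' and 'country').

    The input records are never modified, so the raw data stays available.
    """
    cleaned = []
    for row in rows:
        amount = float(row["amount"])
        if config.drop_negative_amounts and amount < 0:
            continue  # refund: excluded under the default rule
        country = row["country"].strip()
        if config.uppercase_country:
            country = country.upper()
        # Build a new dict so the caller's record is left untouched.
        cleaned.append({**row, "amount": amount, "country": country})
    return cleaned
```

Passing `CleanseConfig(drop_negative_amounts=False)` keeps refunds for an analysis that needs them, which is exactly the kind of use-case difference a shared dataset would silently hide.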

Tools for data cleansing include data dictionaries, metadata repositories, and technologies such as machine learning or artificial intelligence that identify data problems and characteristics. The idea behind many of these tools is that data is assessed, predictions are made, and the predictions are then adjusted on an ongoing basis to improve understanding of the data. For data dictionaries and metadata management, this work may be crowdsourced among an organization’s data consumers, such as analysts and data scientists.
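The "assess, then keep adjusting" loop can be illustrated with a very small sketch. This is simple rule-based column profiling, not machine learning, and the `ColumnProfiler` class and its outputs are hypothetical names chosen for illustration. It assumes records arrive as Python dicts that all share the same keys. Each new batch updates the running statistics, so the draft data dictionary entries stay current as more data is seen. A crowdsourced dictionary would layer human-written descriptions and business meaning on top of entries like these.

```python
from collections import defaultdict


class ColumnProfiler:
    """Incrementally profiles columns of dict records as new batches arrive.

    Assumes every record has the same keys (a simplification for this sketch).
    """

    def __init__(self):
        self.rows = 0
        self.nulls = defaultdict(int)        # column -> count of empty values
        self.distinct = defaultdict(set)     # column -> set of non-empty values
        self.non_numeric = defaultdict(int)  # column -> values that fail float()

    def update(self, batch):
        """Fold a new batch of records into the running statistics."""
        for record in batch:
            self.rows += 1
            for column, value in record.items():
                if value is None or str(value).strip() == "":
                    self.nulls[column] += 1
                    continue
                self.distinct[column].add(value)
                try:
                    float(value)
                except (TypeError, ValueError):
                    self.non_numeric[column] += 1

    def dictionary(self):
        """Return a draft data dictionary entry per column seen so far."""
        if self.rows == 0:
            return {}
        entries = {}
        for column in set(self.nulls) | set(self.distinct):
            non_null = self.rows - self.nulls[column]
            entries[column] = {
                "null_rate": self.nulls[column] / self.rows,
                "distinct_values": len(self.distinct[column]),
                "looks_numeric": non_null > 0 and self.non_numeric[column] == 0,
            }
        return entries
```

Calling `update` again with each day’s extract and re-reading `dictionary()` is the ongoing adjustment in miniature: a column that looked numeric can be reclassified the moment a non-numeric value appears.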

Make an effort to take advantage of the tools available within your organization. Make friends with other analysts. Have a data brunch; it may be the most Bay Area thing you ever do.

Below are more key articles about the cost of data preparation and how it may be reduced through the sharing of information:

CIO – You won’t clean all that data, so let AI clean it for you

Harvard Business Review – Breaking Down Data Silos

The Verge – The biggest headache in machine learning? Cleaning dirty data off the spreadsheets

Jennifer Mask is a Solutions Architect for Teradata living in San Francisco, CA. She began her career in 1999 in Atlanta, GA, and worked with both a major airline and a major soft drink manufacturer before joining the Teradata team in 2007. The focus of her experience working with Teradata and other technologies has been helping business analysts better use data to answer questions and solve problems at scale. This includes designing, implementing, and supporting applications for analysts, as well as training. Prior to moving to California to become a solutions architect, Jennifer was a customer education consultant who specialized in customized learning based on student needs and skill sets.
